Sixfold Content

Sixfold News

AI Adoption Guide for Underwriting Teams

Over the past few years, we've worked with more than 50 underwriting teams to bring AI into their day-to-day operations. So we've put together a 5-stage guide that covers what successful AI adoption actually looks like, from picking the right starting point to building trust and scaling across your team.

Explore all Resources

Stay informed, gain insights, and elevate your understanding of AI's role in the insurance industry with our comprehensive collection of articles, guides, and more.

Sixfold's 100 Billion Token Milestone

Sixfold was featured at OpenAI’s DevDay keynote, recognized for crossing 100 billion tokens processed. We sat down with our Senior AI Engineer, Drew Das, to talk about what that milestone really means for underwriting.

Sixfold was featured on the big screen at OpenAI’s DevDay 2025, recognized for surpassing 100 billion tokens processed. To make it even better, Senior AI Engineer Drew Das was honored among global developers for his contributions.

This is a huge milestone for Sixfold, a reflection of the real impact our AI is making in underwriting. But what does 100 billion tokens actually mean in practice? We sat down with Drew to find out.

Can you start by explaining what exactly OpenAI recognized Sixfold for at DevDay, and what it felt like to see your name up on the screen?

Seeing my name on stage felt like public validation of the engineering team’s hard work. Sixfold is operating at the frontier of large-scale AI in insurance underwriting. We’re not a pilot anymore; we’re in production.

It also hit home the responsibility that comes with it. Yes, we’ve built scale, but now we have to make sure what we produce is high quality and meaningfully used. Sixfold isn’t experimenting with LLMs; we’re running them reliably where accuracy, latency, and auditability really matter.

What does “100 billion tokens” actually represent in Sixfold’s day-to-day work?

Each token is a fragment of understanding, a piece of text, data, or context that our models interpret and turn into something underwriters can act on.

It signals the volume of underwriting data, documents, broker submissions, and risk information that our platform analyzes and processes through generative AI workflows.

Hitting 100 billion means we’ve moved many workflows off manual underwriting review and into AI. When repetitive work is reduced, underwriters spend more time on relationships, strategy, and higher-value decisions.

So… what’s next, another 100 billion tokens?

Token count is becoming table stakes. What matters now is how those tokens are applied and the outcomes they create.

We’re focusing on deeper workflow integration, bringing AI agents that go beyond risk assessment to support decisions, automate workflows, and deliver real-time underwriting intelligence. Our Research Agent is a great example of that.

Considering the high-stakes nature of insurance, every risk insight and decision generated by Sixfold must be auditable and traceable. Our solution isn’t just built for scale, but for governance.

On the engineering side, what does it take to run something at that scale?

From an engineering perspective, scale brings complexity. We’re constantly balancing latency, cost, and accuracy.

Many AI projects stop at the “cool demo” stage, but we’re pushing through the messy engineering: hybrid search, re-ranking, prompt tuning, and evaluation, all happening under the hood.

Scaling means better signals, more edge cases surfaced, and faster model learning. It’s a flywheel that keeps getting smarter.

Referral Agent: From “To-Do” to “Done” in Seconds

Sixfold is shifting from insight to action. Our AI has helped underwriters surface risks, highlight insights, and guide decisions, but now we’re taking the next step with a suite of agents designed to clear bottlenecks in the underwriting process. First up: Referral Agent.

Since AI’s introduction in underwriting, it has been about surfacing information: flagging risks, highlighting important insights, and providing recommendations. But there's always been a gap between AI that knows what needs to be done and AI that actually does it.

Sixfold is building AI agents that close that gap: agents that work alongside underwriters to move cases forward autonomously. Referral Agent marks a shift in what Sixfold can do for your underwriting team, starting with one of the biggest bottlenecks we hear about from underwriters: referrals.

“We’re no longer just telling underwriters what to do; we’re helping them do it. This launch represents a major shift in how we drive efficiency and consistency in underwriting, turning ‘here’s what you need to know’ into ‘here’s what we’ve already done about it.’ The Referral Agent is just the beginning of that vision.”

- Alex Schmelkin, Founder and CEO of Sixfold

Where 40% of Cases Get Stuck

When we asked underwriters about their biggest workflow frustrations, referrals came up again and again.

Every underwriter knows this routine: you spot a case that needs escalation, and suddenly you're building a package. Pull the rules. Cross-reference submission details. Write a detailed email explaining your reasoning. Make sure the approver, such as a senior underwriter, has everything they need to make a decision.

It's necessary work, but it takes about an hour per case. With up to 40% of applications requiring escalation, underwriters can spend half their day packaging referrals instead of evaluating risk.
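As a rough check on the "half their day" figure, the arithmetic can be sketched as below. Only the one-hour packaging time and the 40% referral rate come from the text above; the daily case count and the eight-hour working day are illustrative assumptions, not Sixfold figures.

```python
def referral_hours_per_day(cases_per_day: float,
                           referral_rate: float = 0.40,
                           hours_per_referral: float = 1.0) -> float:
    """Hours per day spent packaging referrals."""
    return cases_per_day * referral_rate * hours_per_referral


# Assumption (hypothetical): an underwriter handles 10 cases in an 8-hour day.
hours = referral_hours_per_day(cases_per_day=10)
share_of_day = hours / 8

print(f"{hours:.1f} hours, {share_of_day:.0%} of the day")
```

Under those assumed inputs, four referrals at an hour each take up half of the working day; a lighter caseload or a lower referral rate shrinks the share proportionally.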

The ripple effects hurt everyone. Brokers wait days for responses while referrals sit in approval queues. Approvers get packages missing key details and have to circle back with questions. What should be a quick handoff becomes a multi-day process that slows everyone down.

AI That Completes the Loop

Referral Agent transforms how this process works. Instead of stopping at identifying that a case needs a referral, it handles the next steps as well. It executes referrals on its own: it flags when a case needs escalation, assembles the complete referral package, and drafts a ready-to-send email in your preferred tone.

The underwriter's role changes from package preparation to package review, freeing up time from admin work and letting them focus on more strategic decisions.

The repetitive process that used to take 60 minutes? It now takes 1 minute.

How it works

Sixfold knows the insurer’s unique risk appetite, applies what it’s learned, and clearly shows if and why a referral is required after case analysis is completed.

When the agent flags a case for escalation, it constructs a complete referral package in email form: a business summary including the company's operational focus and a structured rationale section that walks through each referral guideline breach step-by-step, citing the exact percentages, dollar amounts, and policy thresholds that might have triggered the referral requirement.
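To make the idea of a structured rationale concrete, here is a minimal sketch of checking a submission against referral guidelines and citing the exact values involved. It is not Sixfold's implementation: the `Guideline` class, the field names, and the thresholds are all hypothetical, chosen only to illustrate the pattern.

```python
from dataclasses import dataclass


@dataclass
class Guideline:
    name: str         # human-readable rule name (hypothetical)
    key: str          # field to read from the submission dict
    threshold: float  # referral is required above this value
    unit: str         # "$" or "%", used only for display


def format_value(value: float, unit: str) -> str:
    """Render dollar amounts with separators and other values with a unit suffix."""
    return f"${value:,.0f}" if unit == "$" else f"{value:g}{unit}"


def find_breaches(submission: dict, guidelines: list) -> list:
    """Return one rationale line per guideline the submission exceeds."""
    lines = []
    for g in guidelines:
        value = submission.get(g.key)
        if value is not None and value > g.threshold:
            lines.append(
                f"{g.name}: {format_value(value, g.unit)} exceeds "
                f"the threshold of {format_value(g.threshold, g.unit)}"
            )
    return lines


# Hypothetical guidelines and submission values, for illustration only.
guidelines = [
    Guideline("Total insured value", "tiv", 5_000_000, "$"),
    Guideline("Subcontracted work", "subcontracted_pct", 25, "%"),
]
submission = {"tiv": 7_500_000, "subcontracted_pct": 10}

breaches = find_breaches(submission, guidelines)
for line in breaches:
    print(line)
```

An empty result means no guideline was breached and no referral is needed; each non-empty line can be dropped into the rationale section of the drafted email.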

With this launch, we’re making the underwriter’s inbox part of the workflow. Underwriters can just forward a broker’s email, with attachments, straight to Sixfold, and the risk assessment kicks off automatically. When it’s time to refer, a pre-drafted email is ready to send with a single click from the underwriter’s default email provider.

Benefits by Role

For Referrers

- Always know when to refer

- No more repetitive referral tasks

- Hours back every day for actual underwriting

For Approvers

- Every referral is standardized, making it easier to review

- With complete referral packages, you can decide faster - often same-day

- Automatic audit trail for compliance and training

For Insurers

- Referral guidelines are applied consistently across every team

- Quotes out faster → happier brokers → improved hit ratios

- A process that scales with every underwriter hired

The Broader Vision

Referral Agent is part of a shift in what Sixfold can do for your team. In 2024, Sixfold gave underwriters a brain that reads and assesses; now Sixfold equips them with a brain that acts: AI agents that move cases forward on their own.

Each Sixfold agent plugs into your existing workflow to solve a specific bottleneck. Backlog of referrals? Deploy Referral Agent. Team spending hours researching online? The Research Agent does it in minutes. Endless broker negotiations slowing you down? The Negotiation Agent (coming soon) handles it. Use them individually or stack them together.

“Every underwriter knows that sinking feeling of a growing referral queue. With the Referral Agent, that queue clears itself. It proves that AI can take action, not just give advice. It's the first of many agents that free underwriters from processor work so they can become portfolio strategists.”

- Lana Jovanovic, Head of Product @Sixfold

Experience It Live: Try Referral Agent at ITC Vegas in October. Forward an email, watch the risk assessment run, and generate a referral in real time. Book your ITC meeting with Sixfold, or schedule a digital demo.

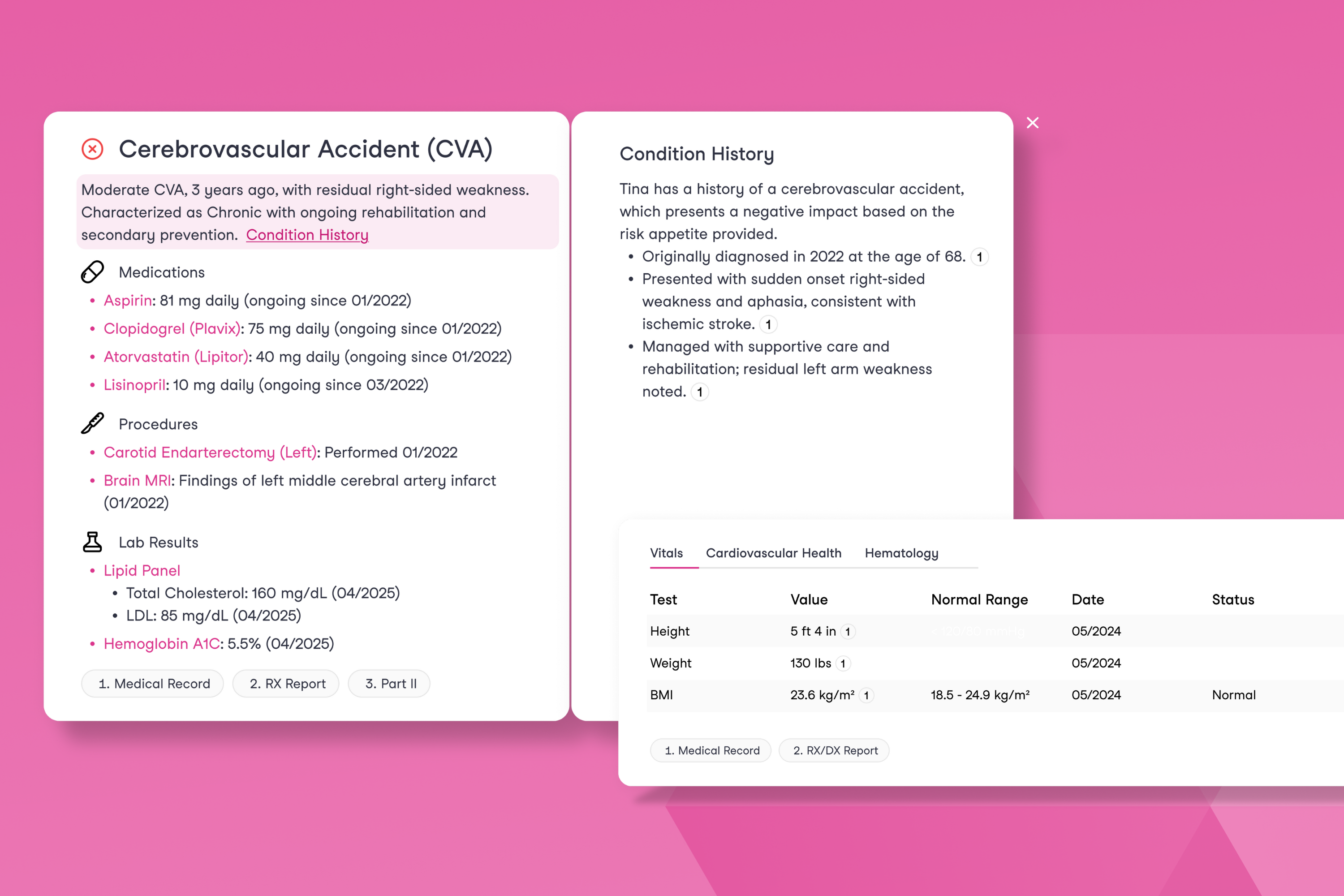

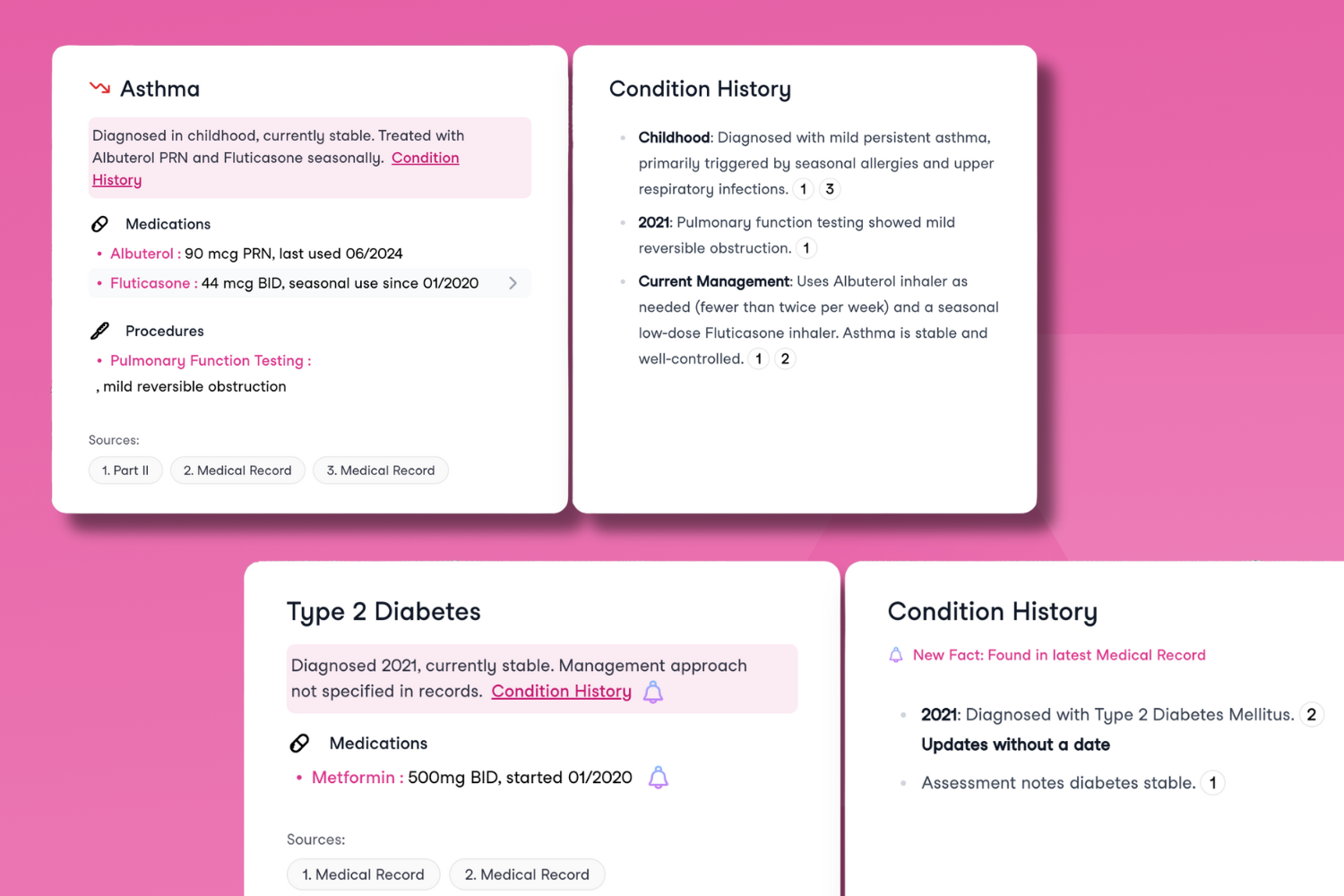

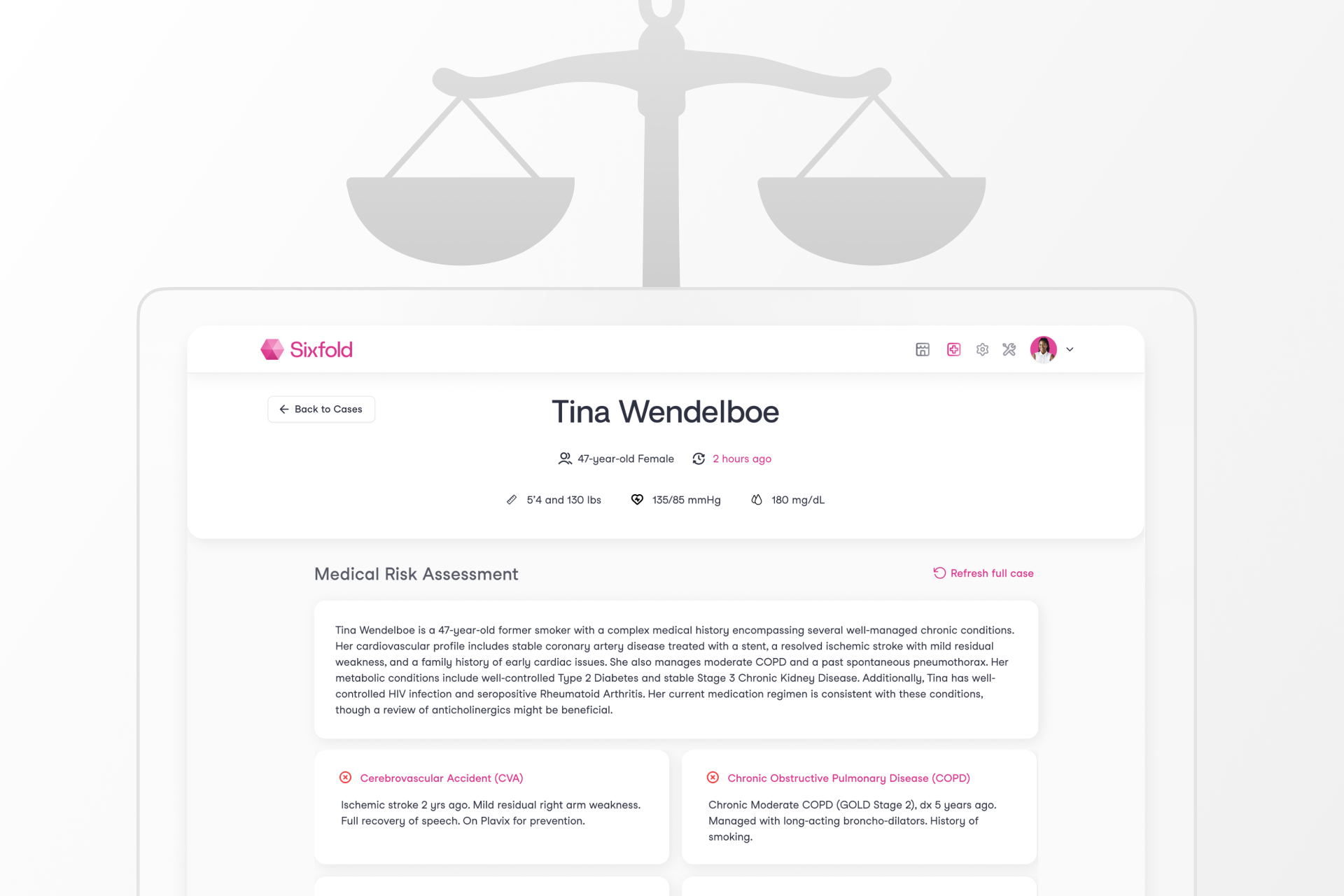

New in Life & Health: Clear Condition Stories with Clinical Insights

Introducing Conditions and Core Clinical Data for Life & Health: Our latest update helps underwriters see the full picture, faster. Conditions tie related facts to a diagnosis. Core Clinical Data brings key lab results into one view. The result? Quicker reviews, clearer decisions, and better outcomes.

Life & Health underwriters often have to go through hundreds of pages of medical documents to understand an applicant’s health profile, knowing that one missed detail could lead to the wrong coverage decision.

When we introduced our Life & Health Underwriting AI, we set out to give underwriters everything they need in one place: diagnoses, medications, and procedures pulled from applications and supporting documents, aligned to the insurer’s unique risk appetite. They no longer had to spend time manually going through medical documents.

But after getting feedback from underwriters across global insurers, we realized something important: presenting information alone isn't enough. What they really need is the full health story, with all the pieces connected. For example, if a medication appears, they need to know the full context around it: how often it was prescribed and any related diagnoses or procedures.

That’s why we’re upgrading our Underwriting AI with Conditions and Core Clinical Data, designed to reflect the way underwriters think about the overall health profile.

What’s New?

Conditions: From Medical Facts to the Full Story

Conditions completely change how Sixfold’s insights are presented to underwriters.

Previously, all relevant data, like Personal Health, Medications, Procedures, and more, appeared as individual facts. While surfacing this information is essential, it didn’t show underwriters how it all connected.

The thing is, Life and Health underwriters don't analyze each fact in isolation; they think about how it fits into the bigger picture. What does this medication suggest? How severe is the condition? How does it all connect?

That’s why Sixfold now brings together all relevant information under a diagnosed condition, including:

- Medications and ongoing treatment

- Procedures and lab results

- The condition’s full history, with context and progression over time

For example, take Gastritis, an inflammation of the stomach lining that can cause pain, indigestion, and discomfort. An applicant might have been diagnosed with it a while ago, be taking medication such as Pantoprazole, and have undergone a procedure such as an endoscopy five years ago.

Instead of treating these details in isolation, Sixfold now recognizes they’re all part of the same condition story. This mirrors exactly how an experienced underwriter would naturally connect the dots, seeing not just health facts, but the bigger picture of an applicant’s health history.
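The shift from isolated facts to condition stories can be pictured as a simple regrouping of data. The sketch below assumes each extracted fact already carries a label for the condition it relates to; that label, the field names, and the sample facts (including the Hypertension entry) are hypothetical and not taken from Sixfold's data model.

```python
from collections import defaultdict

# Hypothetical extracted facts; "condition" is an assumed, pre-assigned label.
facts = [
    {"type": "diagnosis", "text": "Gastritis", "condition": "Gastritis"},
    {"type": "medication", "text": "Pantoprazole", "condition": "Gastritis"},
    {"type": "procedure", "text": "Endoscopy", "condition": "Gastritis"},
    {"type": "medication", "text": "Lisinopril", "condition": "Hypertension"},
]


def group_by_condition(facts: list) -> dict:
    """Group flat facts into {condition: {fact type: [texts]}}."""
    stories = defaultdict(lambda: defaultdict(list))
    for fact in facts:
        stories[fact["condition"]][fact["type"]].append(fact["text"])
    # Convert nested defaultdicts to plain dicts for predictable output.
    return {cond: dict(by_type) for cond, by_type in stories.items()}


stories = group_by_condition(facts)
for condition, by_type in stories.items():
    print(condition, by_type)
```

The flat list answers "what facts exist?", while the grouped view answers "what is going on with this condition?", which is the question the text says underwriters actually ask.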

“This release has been a huge focus for our team, and the feedback from early users has been very positive. They’ve shared firsthand how impactful it is to have a single experience that brings together every aspect of an applicant’s medical history.”

- Noah Grosshandler, Product Manager at Sixfold.

Want a closer look at how Conditions work? Join our live product demo on September 11th.

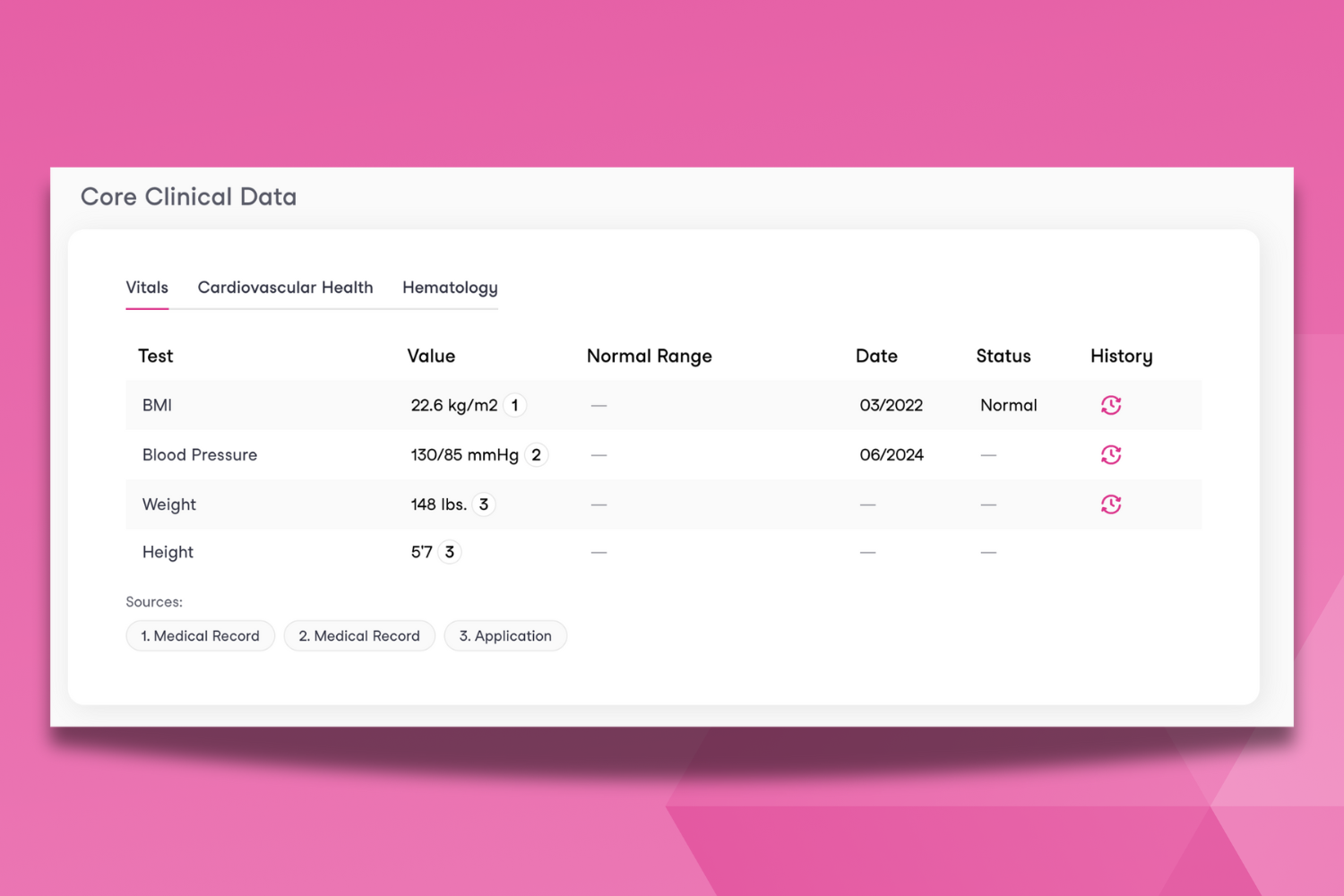

Core Clinical Data: Lab Insights and Historical Trends

Underwriters often have to go through dozens of lab results to answer: Does this applicant have an underlying condition? Are the results concerning? Could they worsen over time?

Core Clinical Data brings all of that information into one place, instantly. It pulls information found in lab results and medical records uploaded to Sixfold, presenting a pre-defined set of health indicators commonly tied to high-risk conditions, in categories such as Vitals, Cardiovascular Health, and Hematology.

It gives underwriters instant and standardized insight: lab values, normal ranges, and historical progression, making it easier to assess the presence, progression, and severity of chronic conditions.
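As an illustration of what "lab values, normal ranges, and historical progression" can look like as data, here is a small sketch that classifies the latest reading against a range and reports the direction of change. The `LabResult` class, the readings, and the 90 to 120 range are placeholder assumptions for the example, not clinical guidance and not Sixfold's logic.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class LabResult:
    taken_on: date
    value: float


def summarize(results: list, low: float, high: float) -> dict:
    """Report the latest value, its range status, and the first-to-last trend."""
    if not results:
        raise ValueError("at least one result is required")
    ordered = sorted(results, key=lambda r: r.taken_on)
    first, latest = ordered[0], ordered[-1]

    if latest.value < low:
        status = "below range"
    elif latest.value > high:
        status = "above range"
    else:
        status = "in range"

    change = latest.value - first.value
    trend = "rising" if change > 0 else "falling" if change < 0 else "stable"
    return {"latest": latest.value, "status": status, "trend": trend}


# Hypothetical systolic blood pressure readings; the range is a placeholder.
readings = [
    LabResult(date(2023, 5, 1), 124),
    LabResult(date(2022, 5, 1), 118),
    LabResult(date(2024, 5, 1), 131),
]
summary = summarize(readings, low=90, high=120)
print(summary)
```

Comparing only the first and last readings is a deliberate simplification; a real trend view would likely consider every point in the series.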

The impact? Core Clinical Data gives underwriters a snapshot of the applicant’s health at a glance, so time once spent reading lab reports can go toward actually binding accounts. With immediate access to historical trends and key lab indicators, underwriters can make faster and more accurate decisions.

How Guardian Cut Review Time in Half

Guardian, one of the largest life insurers in the U.S., faced a common challenge: manual case reviews slowed down underwriters and created bottlenecks in the underwriting process.

By adopting Sixfold for its Disability line, Guardian was able to automatically extract medical data, triage information faster, and speed up case assessments end-to-end.

The impact: a 50% reduction in review time, freeing underwriters from being stuck reviewing medical records and letting them focus on risk decisions. Read more about the results Guardian has seen here.

Now, Guardian is expanding the program across more business lines, and you can see why! Join our Life & Health Product Demo on September 11th, where we’ll showcase how Sixfold works for life & health insurance underwriting.

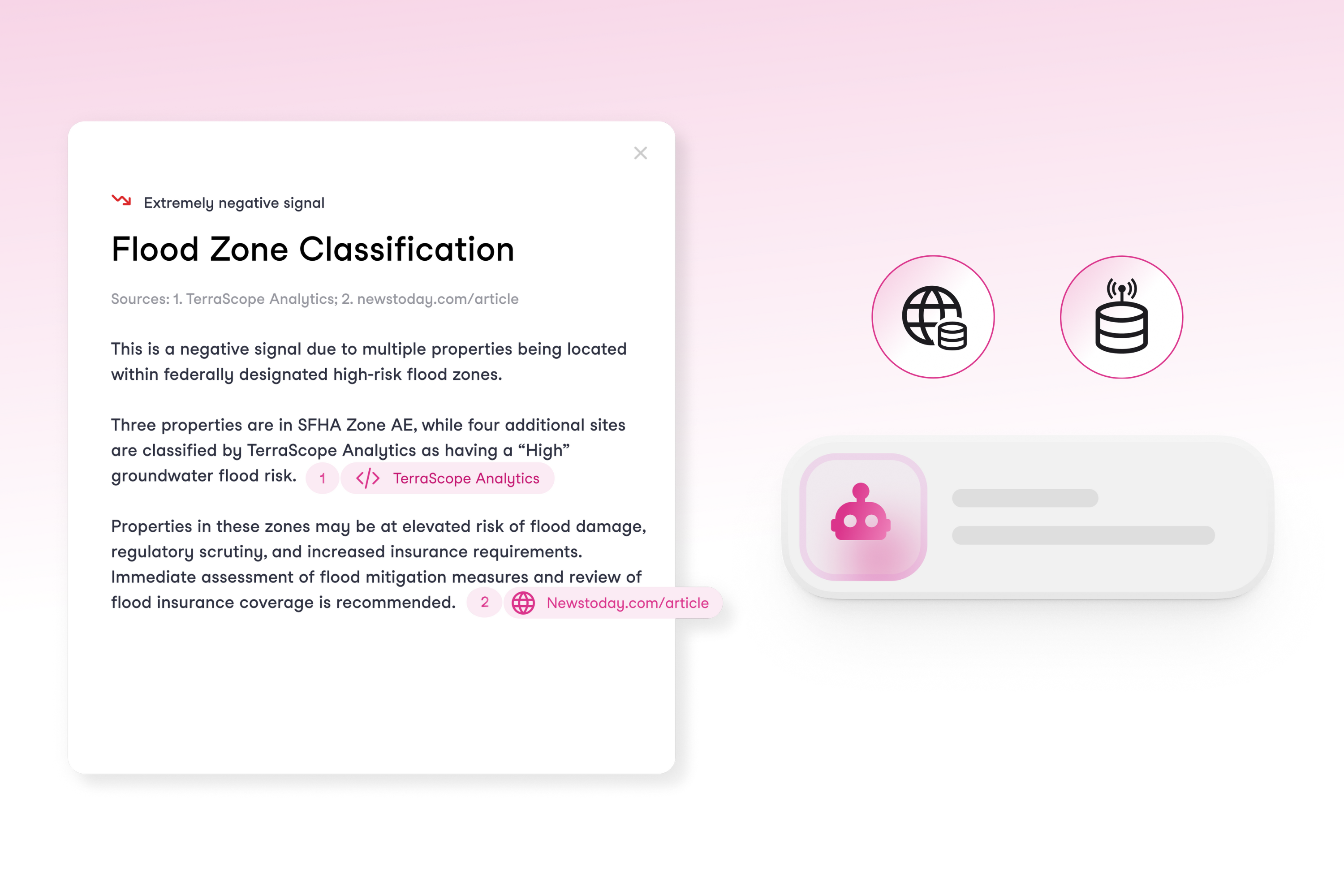

Research Agent: From Hours of Digging to Seconds of Insights

Meet Research Agent, underwriters’ new research partner. It spots the gaps in a submission, finds the missing details, and delivers the insights that matter most for the decision.

In commercial underwriting, submissions rarely contain everything underwriters need to properly assess risks. Critical information such as SEC filings, cyber incidents, litigation history, or executive misconduct isn't in the application, and finding that data falls on the underwriter.

The stakes are high: miss one key detail, and underwriters are suddenly pricing a completely different risk. The research to uncover this information means hours going through public sources such as news archives and databases.

All while brokers demand quotes fast in an increasingly competitive market.

Why We Built It

With Research Agent, underwriters have a research partner who finds the gaps in the submission, locates the missing pieces, and brings back the insights that matter for the decision. The agent does the external research work on the underwriter’s behalf.

By filling critical information gaps and incorporating research from the public web and connected third-party data sources into Sixfold’s overall risk assessment, Research Agent gives underwriters a complete risk picture for more precise appraisal. Underwriters can view the exact origin of any piece of information, with clickable links that take them directly to the original source for verification or further detail.

The result? Quotes go out faster, underwriters make more consistent calls, and decisions get made without wondering what might have been missed.

“Research Agent has been one of our most anticipated features, especially for customers in specialty lines where extensive research is needed to get a complete picture of the risk. We expect the agent to save them at least two hours per case.”

- Alex Schmelkin, Founder & CEO at Sixfold

When Research Makes Or Breaks The Quote

Sixfold’s Research Agent is designed to support commercial lines of business where external data is essential in risk evaluation and quoting. Lines of business we’re seeing the highest demand for include:

- General Liability - Public web research to capture OSHA violations, litigation history, and negative press coverage.

- Cyber - Third-party cyber threat intelligence plus broader public web research for cyber breach disclosures and corporate litigation involving cybersecurity lapses.

- E&S Property - Public and/or third-party data on location- and climate-based risks (flood, wildfire, crime, etc.), permit history, and company mentions in the news.

- Directors & Officers (D&O) - News on executive misconduct, class-action lawsuits, SEC filings, and shareholder news.

- Healthcare & Life Sciences - Public records of malpractice lawsuits, regulatory actions, patient safety concerns, and news coverage related to clinical trials or product safety.

While Competitors Prep, You Price

Research Agent delivers wins across the board: immediate time savings for underwriters and competitive advantages for insurers.

“We’re seeing growing demand for research-heavy underwriting. With this launch, we accelerate the time to quote while elevating decision-making with richer context.”

- Lana Jovanovic, Head of Product at Sixfold

Focus on what matters: Those hours gained back per case? That's time underwriters can spend on what they do best: making smart risk decisions, strengthening broker relationships, and building customer connections instead of drowning in research tasks.

Speed wins deals: Reduced manual research burden means underwriters can respond faster to brokers, increase their quote volume, and capture more bound premiums. For carriers competing on both responsiveness and quality, this means winning more of the right business.

Same quality, every time: Every underwriter now has access to the same thorough research process. No more inconsistency based on who's handling the case or how much time they have to dig. Better decisions, whether it's one case or a thousand.

Improved portfolio performance: Sixfold’s comprehensive risk assessment, now with the added capability of public web research and connected third-party data, equips underwriters with a deeper understanding of each risk.

Contact Sixfold to see the Research Agent in action.

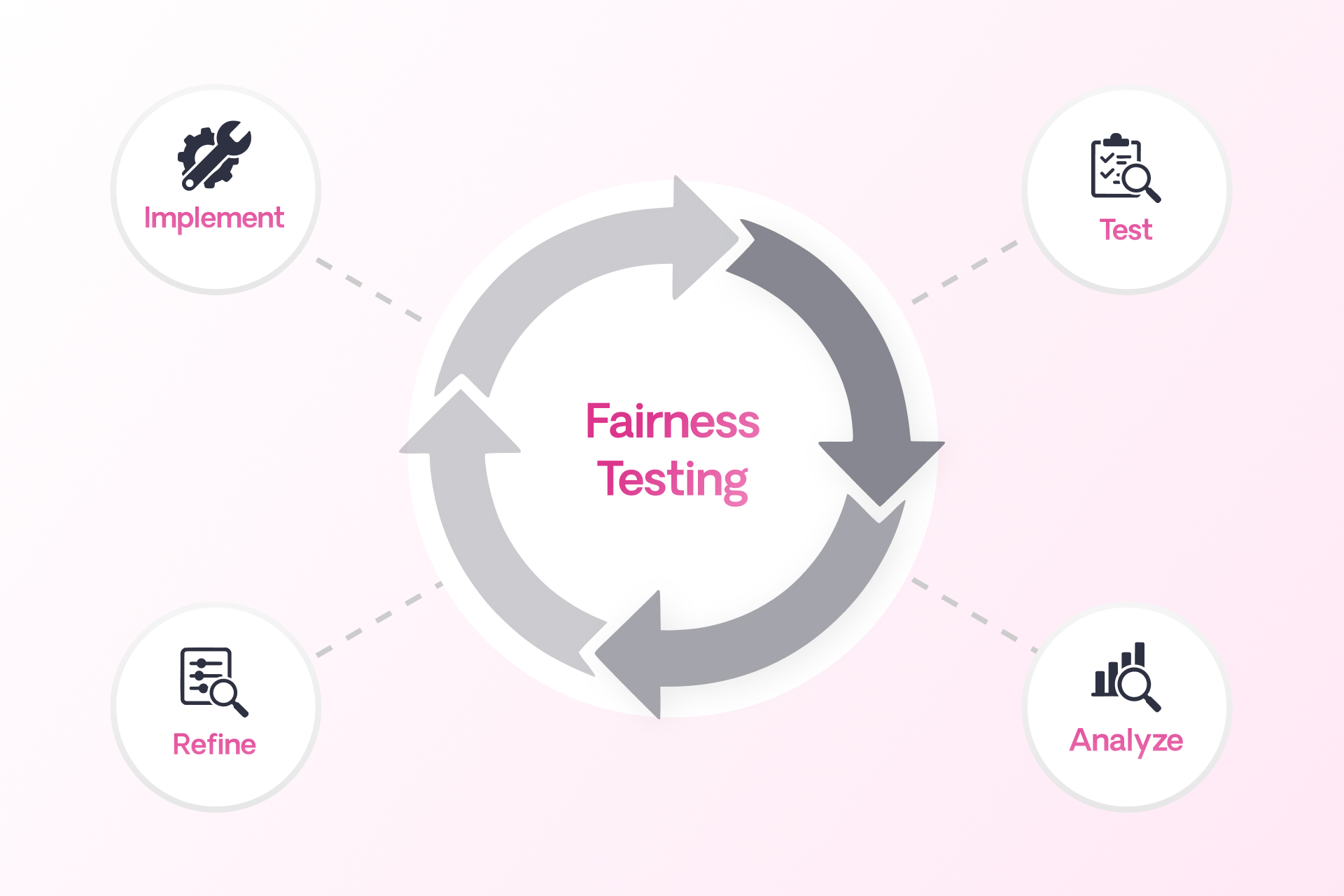

Sixfold's Approach to AI Fairness & Bias Testing

As AI becomes more embedded in insurance underwriting, ensuring fairness is a shared responsibility across carriers, vendors, and regulators. Sixfold's commitment to responsible AI means continuously exploring new ways to evaluate bias.

As AI becomes more embedded in the insurance underwriting process, carriers, vendors, and regulators share a growing responsibility to ensure these systems remain fair and unbiased.

At Sixfold, our dedication to building responsible AI means regularly exploring new and thoughtful ways to evaluate fairness.¹

We sat down with Elly Millican, Responsible AI & Regulatory Research Expert, and Noah Grosshandler, Product Lead on Sixfold's Life & Health team, to discuss how Sixfold is approaching fairness testing in a new way.

Fairness As AI Systems Advance

Fairness in insurance underwriting isn’t a new concern, but testing for it in AI systems that don’t make binary decisions is.

At Sixfold, our Underwriting AI for life and health insurers doesn’t approve or deny applicants. Instead, it analyzes complex medical records and surfaces relevant information based on each insurer's unique risk appetite. This allows underwriters to work much more efficiently and focus their time on risk assessment, not document review.

While that’s a win for underwriters, it complicates fairness testing. When your AI produces qualitative outputs such as facts and summaries, rather than scores and decisions, most traditional fairness metrics won’t work. Testing for fairness in this context requires an alternative approach.

“The academic work around fairness testing is very focused on traditional predictive models; however, Sixfold is doing document analysis,” explains Millican. “We needed to develop new methodologies for fairness testing that reflect how Sixfold works.”

“Even selecting which facts to pull and highlight from medical records in the first place comes with the opportunity to introduce bias. We believe it’s our responsibility to test for and mitigate that,” Grosshandler adds.

While regulations prohibit discrimination in underwriting, they rarely spell out how to measure fairness in systems like Sixfold’s. That ambiguity has opened the door for innovation, and for Sixfold to take initiative on shaping best practices and contributing to the regulatory conversation.

A New Testing Methodology

To address the challenge of fairness testing in a system with no binary outcomes, Sixfold is developing a methodology rooted in counterfactual fairness testing. The idea is simple: hold everything constant except for a single demographic attribute and see if and how the AI’s output changes.²

“We start with an ‘anchor’ case and create a ‘counterfactual twin’ who is identical in every way except for one detail, like race or gender. Then we run both through our pipeline to see if the medical information that’s presented in Sixfold varies in a notable or concerning way,” Millican explains.

“Ultimately we want to validate that medically similar cases are treated the same when their demographic attributes differ,” Grosshandler states.

Proof-of-Concept

For the initial proof-of-concept, the team is focused on two key dimensions of Sixfold’s Life & Health pipeline.

1. Fact Extraction Consistency

Does Sixfold extract the same facts from medically identical underwriting case records that differ only in one protected attribute?

2. Summary Framing and Content Consistency

Does Sixfold produce diagnosis summaries with equivalent clinical content and emphasis for medically identical underwriting cases?

“It’s not just about missing or added facts, sometimes it’s a shift in tone or emphasis that could change how a case is perceived,” Millican explains. “We want to be sure that if demographic details are influencing outputs, it’s only when clinically appropriate. Otherwise, we risk surfacing irrelevant information that could skew decisions.”
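The anchor-and-twin idea behind the first dimension, fact extraction consistency, can be sketched in a few lines. This is an illustrative outline under stated assumptions, not Sixfold's pipeline: `make_twin`, `compare_extraction`, and `toy_extract` are hypothetical names, and the toy extractor merely stands in for a real extraction step.

```python
from typing import Callable


def make_twin(case: dict, attribute: str, new_value: str) -> dict:
    """Copy the anchor case, changing exactly one demographic attribute."""
    twin = dict(case)
    twin[attribute] = new_value
    return twin


def compare_extraction(extract: Callable[[dict], set], anchor: dict,
                       attribute: str, new_value: str) -> dict:
    """Run anchor and twin through the same extractor and diff the fact sets."""
    twin = make_twin(anchor, attribute, new_value)
    anchor_facts = extract(anchor)
    twin_facts = extract(twin)
    return {
        "only_in_anchor": anchor_facts - twin_facts,
        "only_in_twin": twin_facts - anchor_facts,
        "consistent": anchor_facts == twin_facts,
    }


def toy_extract(case: dict) -> set:
    """Stand-in extractor: splits the record text into normalized facts."""
    parts = case["record"].split(";")
    return {p.strip().lower() for p in parts if p.strip()}


# Hypothetical anchor case, for illustration only.
anchor = {"gender": "female",
          "record": "Gastritis diagnosed; Pantoprazole prescribed"}

result = compare_extraction(toy_extract, anchor, "gender", "male")
print(result["consistent"], result["only_in_anchor"], result["only_in_twin"])
```

A non-empty difference for a medically identical pair is the signal worth investigating. The second dimension, summary framing and emphasis, cannot be captured by a set comparison and would need a separate, more qualitative check.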

Expanding the Scope

While the team’s current focus is on foundational fairness markers (race and gender), the methodology is designed to evolve. Future testing will likely explore proxy variables such as ZIP codes, names, and socioeconomic indicators, which might implicitly shape model behavior.

“We want to get into cases where the demographic signal isn’t explicit, but the model might still infer something. Names, locations, insurance types, all of these could serve as proxies that unintentionally influence outcomes,” Millican elaborates.

The team is also thinking ahead to version control for prompts and model updates, ensuring fairness testing keeps pace with an evolving AI stack.

“We’re trying to define what fairness means for a new kind of AI system,” explains Millican. “One that doesn’t give a single output, but shapes what people see, read, and decide.”

Sixfold isn’t just testing for fairness in isolation; it’s aiming to contribute to a broader conversation on how LLMs should be evaluated in high-stakes contexts like insurance, healthcare, finance, and more.

That’s why Sixfold is proactively bringing this work to the attention of regulatory bodies. By doing so, we hope to support ongoing standards development in the industry and help others build responsible and transparent AI systems.

“This work isn’t just about evaluating Sixfold, it’s about setting new standards for a new category of AI. Regulators are still figuring this out, so we’re taking the opportunity to contribute to the conversation and help shape how fairness is monitored in systems like ours,” Grosshandler concludes.

Positive Regulatory Feedback

When we recently walked through our testing methodology and results with a group of regulators focused on AI and data, the feedback was both thoughtful and encouraging. They didn’t shy away from the complexity, but they clearly saw the value in what we’re doing.

“The fact that it’s hard shouldn’t be a reason not to try. What you’re doing makes sense... You’re scrutinizing something that matters,” said one senior policy advisor.

One of the key themes that came up during the meeting was the unique nature of generative AI, and why it demands a different kind of oversight. As one senior actuary and behavioral data scientist put it: “Large language models are more qualitative than quantitative... A lot of technical folks don’t really get qualitative. They’re used to numbers. The more you can explain how you test the language for accuracy, the more attention it will get.”

That comment really resonated. It reflects the heart of our approach: we’re not just tracking metrics. We’re evaluating how language evolves, how facts can shift, and how risk is framed and communicated depending on the inputs.

The Road Ahead

Fairness in AI isn’t a fixed destination; it’s an ongoing commitment. Sixfold’s work in developing and refining fairness and bias testing methodologies reflects that mindset.

As more organizations turn to LLMs to analyze and interpret sensitive information, the need for thoughtful, domain-specific fairness methods will only grow. At Sixfold, we’re proud to be at the forefront of that work.

Footnotes

¹ While internal reviews have not surfaced evidence of systemic bias, Sixfold is committed to continuous testing and transparency to ensure that remains the case as we expand and refine our AI systems.

² To ensure accuracy, cases involving medically relevant demographic traits, like pregnancy in a gender-flipped case, are filtered out. The methodology is designed to isolate unfair influence, not obscure legitimate medical distinctions.

Meet Narrative: Your Shortcut to Risk Documentation

Sixfold’s latest launch introduces Narrative, a feature that helps underwriters document risk faster and more consistently. First rolled out with Zurich’s North America Middle Market team, Narrative is already helping standardize how risk is communicated across the organization.

No one became an underwriter because they love writing case documentation. But that’s where a huge amount of time goes today. Referral notes, peer review memos, audit documentation, written and rewritten, case after case.

- A single case can take an hour, often several hours, to document.

- Multiply that across a typical team handling hundreds of submissions each week. The result is thousands of hours spent each year on documentation alone.

- Underwriters are doing this work while balancing dozens of other tasks: reviewing new submissions, responding to brokers, preparing quotes, and managing existing accounts.

80% Automation, 20% Judgment

Sixfold’s Narrative feature automates and standardizes how risk is communicated across the organization. It automatically generates a risk narrative that matches each insurer’s appetite, tone and format, while giving underwriters the flexibility to apply their own judgment where it matters.

See below for a quick product walkthrough from Shirley Shen, Senior Product Manager @Sixfold:

The Narrative feature is built to:

1. Align with the insurer’s unique risk appetite

2. Surface key facts and risk insights that matter most

3. Adhere to required documentation standards and formats

“Think of all the documents underwriters have to create for administrative purposes. Anything that requires them to synthesize the risk overall. Sixfold is doing 80% of that now: bringing together all the facts. Then the underwriter just adds the last 20%, the judgment call.”

- Laurence Brouillette, Head of Customers and Partnerships @Sixfold

Proven with Zurich’s North America Team

The Narrative feature was developed and validated through the 2024 Zurich Innovation Championship (ZIC).

Over a 6-week sprint with Zurich’s North America Middle Market team, Sixfold:

- Partnered with 16 underwriters and was used in 80%+ of their live submissions

- Processed nearly 4,000 pages of submission and web data

- Achieved an average time savings of 60 minutes per submission

Following this success, Narrative is now rolling out across Zurich’s U.S. offices.

“We launched with four Zurich Middle Market offices in January 2025 and are now expanding Sixfold to dozens more offices countrywide.”

- Amy Nelsen, Head of Underwriting Operations, U.S. Middle Market @Zurich North America

How Zurich gives underwriters time back with Sixfold — featured in The Insurer.

AI That Actually Gets Used

Narrative is a great starting point for insurers looking to bring AI into underwriting workflows today. It takes the repetitive parts of the job off underwriters’ hands without requiring them to change how they already work.

“Sixfold streamlines the way underwriters receive information on new submissions, offering a holistic and simplified overview of a business’s operations and exposures right from the point of entry into our workflow.”

- Madison Chapman, Senior Middle Market Underwriter @Zurich North America

How Sixfold is transforming underwriting for Zurich’s U.S. Middle Market team.

When underwriters experience that impact immediately, with fewer hours spent writing up cases and fewer rounds of revisions, adoption happens easily. AI becomes part of the flow of work because it genuinely makes the day-to-day tasks so much easier.

Get in touch to see how Sixfold fits your underwriting workflow.