See The Complete Health Story Behind Every Application

Sixfold turns complex medical evidence into connected insights for faster, more consistent decisions.

Underwriters spend hours every day manually reviewing lengthy medical records to find what they actually need to make a decision.

Sixfold changes that by applying your underwriting guidelines to instantly identify what matters in every case.

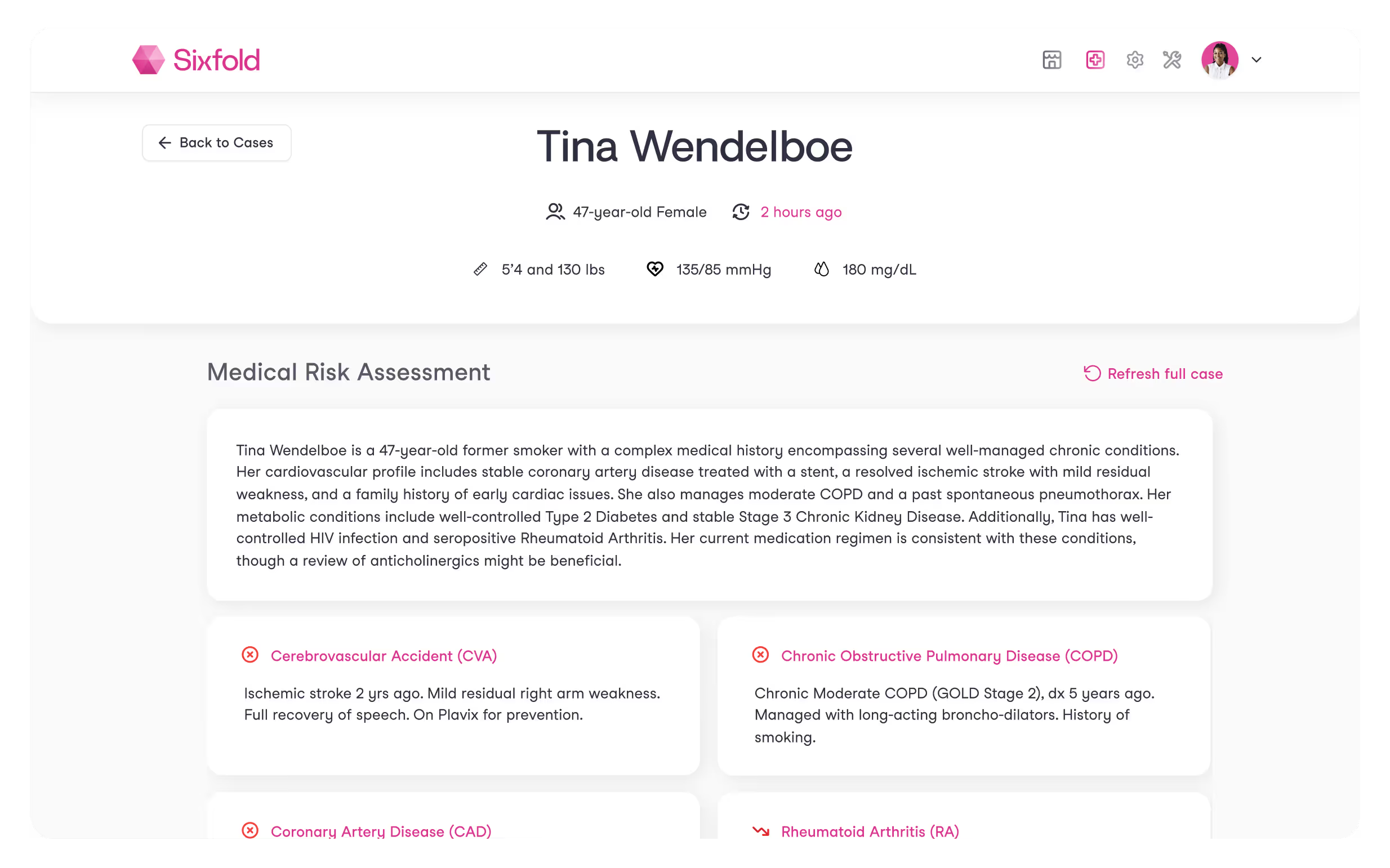

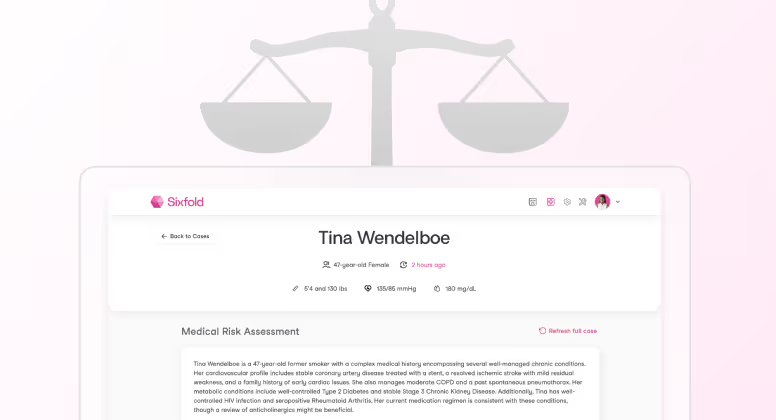

The complete health picture in one place

Sixfold brings together an applicant's medical history, connecting medications, labs, procedures, and diagnoses under each condition so underwriters can quickly understand the complete clinical picture.

An AI agent reads every medical record (APS, EHRs, lab results) to understand the clinical story behind each condition

Flags high-risk diagnoses according to your underwriting manuals

Surfaces clinical insights that show the history, severity, and progression of each condition

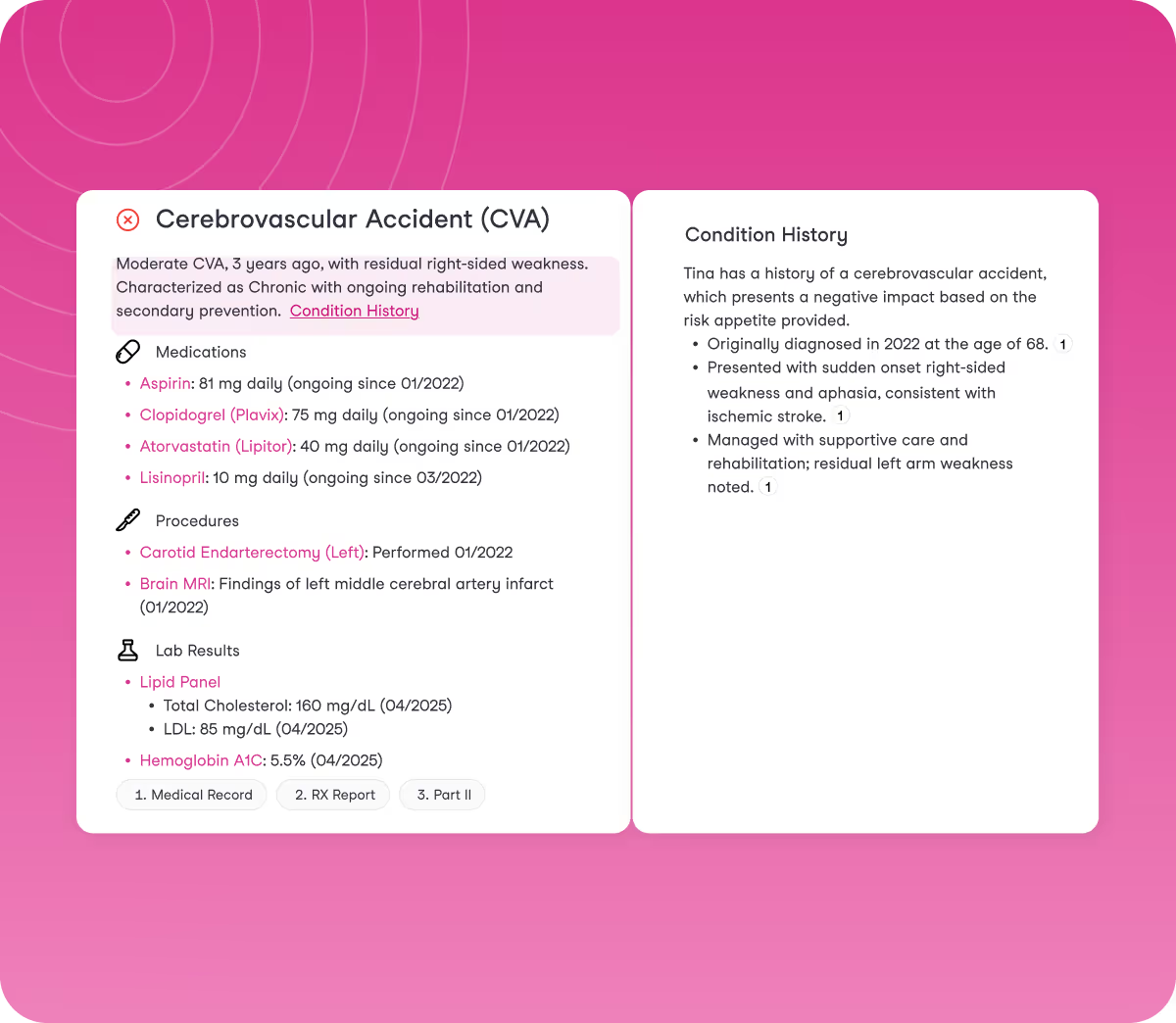

Assess even the most complex cases

Sixfold reviews hundreds of pages across APS, EHRs, lab results, and medical exams, pulling critical details from complex and unstructured documents. It delivers insights aligned with your underwriting guidelines and saves underwriters hours of manual review.

Identifies hidden, hard-to-spot details across Life & Health and Disability cases

Spots discrepancies between application data and medical records, and highlights new facts as they come in

Goes beyond APS summaries by showcasing what matters most for your underwriting decisions

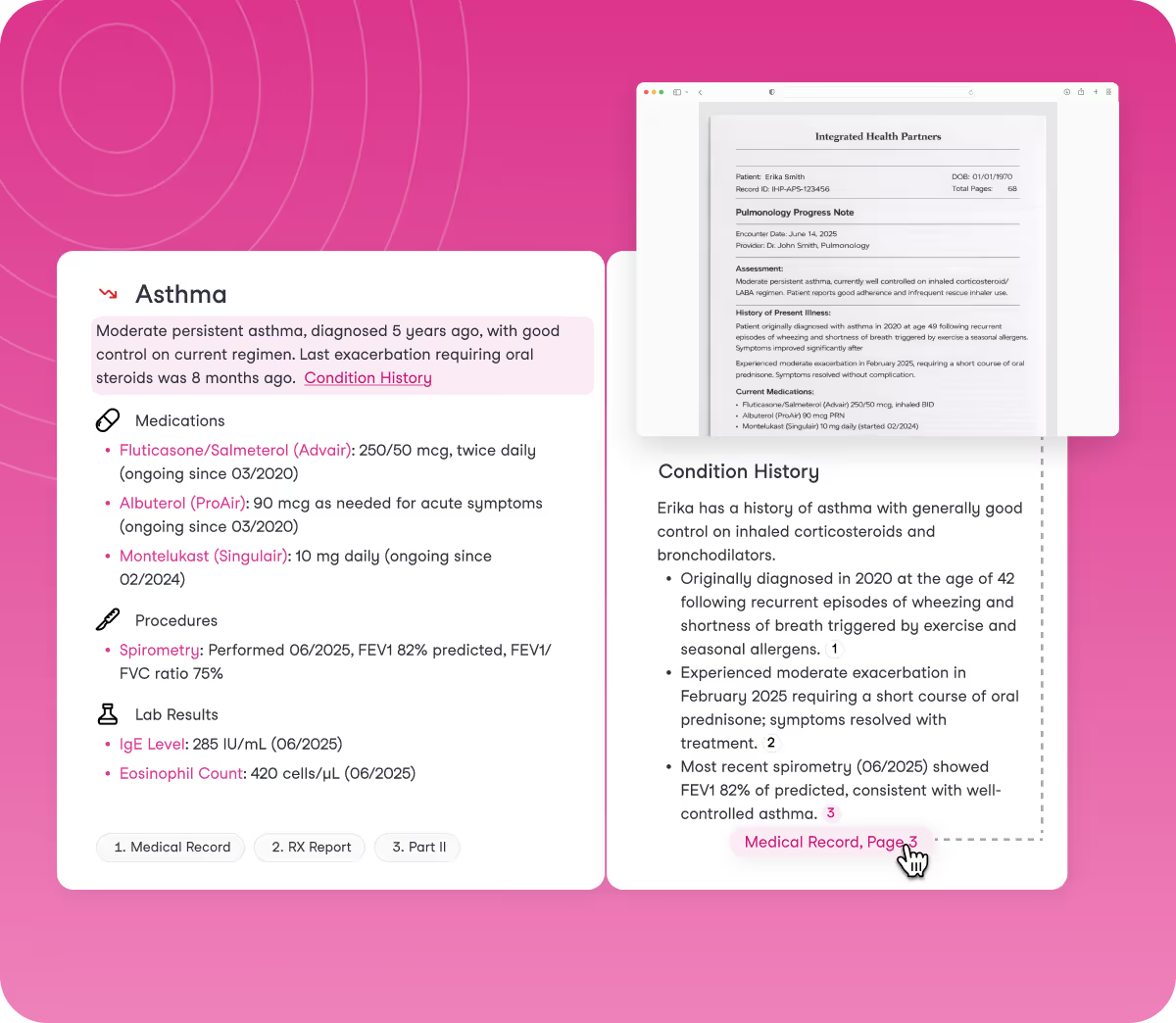

Contextual insights with full traceability

Every insight that Sixfold presents comes with clear documentation. Sixfold shows exactly where information comes from and how it connects, so underwriters can verify details instantly and maintain complete traceability.

In-line citations that link directly to source documents in one click

Continuous fairness and bias testing to ensure insights remain unbiased

In-app feedback lets underwriters refine insights so they keep improving over time

FITS YOUR WORKFLOW

Works with your existing systems

Sixfold works where you work, fitting into your underwriting process and integrating with underwriting platforms, case management systems, and policy admin tools.

Results you’ll see with Sixfold

50%

Improved Efficiency

Faster case processing time. From manually reviewing hundreds of pages of medical records to a clear, connected health story in minutes.

45%

Faster Onboarding

Shorter time to full caseload. New underwriters get productive in weeks, not months.

30%

More GWP per Underwriter

Underwriter productivity improvements. Underwriters process more submissions without the manual work.

“Sixfold reduces underwriter review time by 50% for Guardian”

Head of Individual Markets Underwriting Transformation

HIPAA, SOC 2, and GDPR certified with single-tenant environments for confidentiality

AI you can trust

See our approach to responsible AI: